The fundamental difference between an FPGA and a CPU is the difference between hardware and software. A CPU follows the Von Neumann architecture and executes a series of instructions serially, whereas an FPGA can operate in parallel, much like embedding many processing units in a single chip, so for well-suited workloads its performance can be tens or even hundreds of times that of a single CPU. Generally speaking, any function that a CPU can perform can also be implemented in an FPGA using hardware design methods. However, extremely complex algorithms are harder to implement in hardware and consume a large amount of resources, so if there is no high-performance requirement, a hardware implementation is not worth the cost. For a complex system, it is both necessary and efficient to partition the work sensibly between software and hardware, with a CPU (or DSP) and hardware circuits (such as an FPGA) together completing the system function.

CPU stands for “Central Processing Unit”, and its job is “computing”. Most of the electronic devices we are familiar with, such as cell phones and computers, realize their various functions through computation performed by the CPU. It is the brain of almost all electronic and digital devices. A computer is called a computer precisely because its brain, the CPU, computes.

What is an FPGA? Like a CPU, it is used for computation, but it computes in a very different way. An FPGA (field-programmable gate array) is a semiconductor integrated circuit that can be reprogrammed repeatedly to implement different functions, and whose parameters can also be adjusted dynamically.

FPGAs have been used for many years as a low-volume alternative to application-specific integrated circuits (ASICs). In recent years, however, they have been deployed on a large scale in the data centers of Microsoft, Baidu, and others to provide both powerful computing capability and sufficient flexibility.

FPGAs are often compared to application-specific integrated circuits (ASICs) and microcontrollers. ASICs are tailored for specific tasks and offer optimized performance, but lack flexibility. Microcontrollers, on the other hand, are general-purpose devices that are typically used for simpler tasks and controlled through software.

The advantage of FPGAs is their ability to adapt to a variety of tasks while maintaining high performance. FPGAs can be dynamically reconfigured and are well suited for applications that require flexibility and fast development cycles.

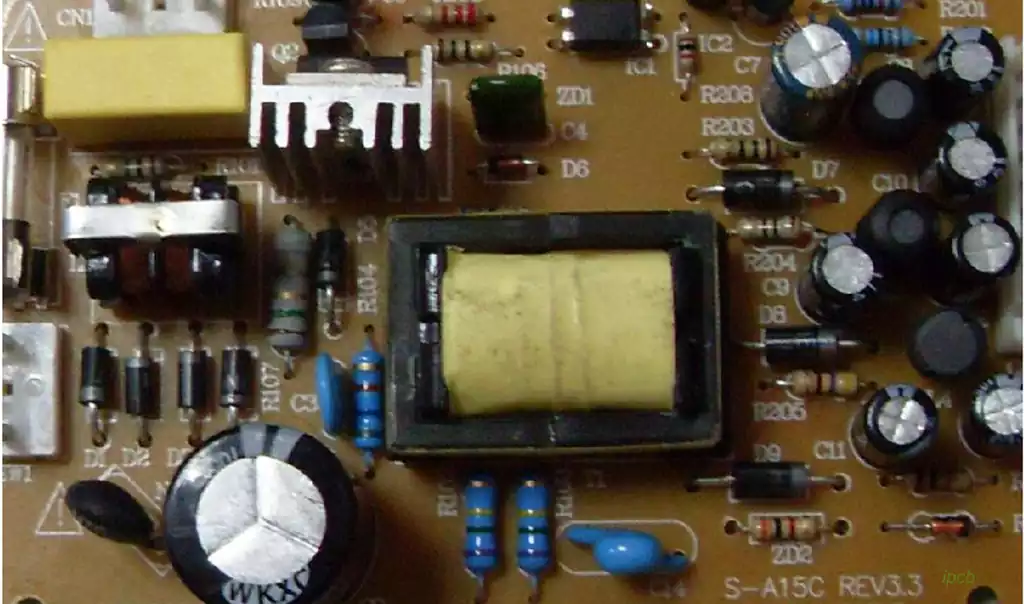

An FPGA is mainly composed of the following parts:

- Logic Block

Logic blocks are the basic building blocks of an FPGA and contain programmable logic elements that can be configured to perform a variety of digital functions, such as AND, OR, and XOR gates. These logic blocks can be programmed to define their functions and connections to implement the desired digital circuitry.

The programmability of logic blocks is one of the characteristics of FPGAs, which gives them a high degree of flexibility and customizability.

- Interconnect

Interconnects are the “wires” that connect logic blocks together. They form a programmable routing matrix that allows flexible connections between different logic blocks and ultimately defines the functionality of the FPGA.

- Input/Output Blocks

Input/Output (I/O) blocks enable the FPGA to communicate with external devices such as sensors, switches, or other integrated circuits. They can be configured to support a variety of voltage levels, standards, and protocols.

- Configuration Memory

Configuration memory stores the programming data that defines how the FPGA’s logic blocks and interconnects are configured. When the FPGA is powered up, this data is loaded into the device, enabling it to perform its intended function.
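To make the roles of the parts above concrete, here is a minimal Python sketch. It assumes that a programmable logic element behaves like a small lookup table (LUT), which is a common way such elements are built; the text above does not specify this. The names `LookupTable`, `CONFIG`, and `half_adder` are hypothetical and exist only for illustration. The truth-table bits play the role of configuration memory, and the function that feeds the same inputs to two elements plays the role of the interconnect.

```python
from typing import Sequence, Tuple


class LookupTable:
    """Hypothetical model of one programmable logic element (a k-input LUT)."""

    def __init__(self, num_inputs: int, truth_table: Sequence[int]) -> None:
        if len(truth_table) != 2 ** num_inputs:
            raise ValueError("truth table needs 2**num_inputs entries")
        self.num_inputs = num_inputs
        # These bits stand in for the configuration memory of the element.
        self.truth_table = list(truth_table)

    def evaluate(self, *inputs: int) -> int:
        if len(inputs) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        index = 0
        for bit in inputs:  # the first input is the most significant index bit
            index = (index << 1) | (bit & 1)
        return self.truth_table[index]


# "Configuration data": the same element becomes a different gate
# depending only on the bits loaded into it.
CONFIG = {
    "and": [0, 0, 0, 1],
    "or": [0, 1, 1, 1],
    "xor": [0, 1, 1, 0],
}

sum_lut = LookupTable(2, CONFIG["xor"])
carry_lut = LookupTable(2, CONFIG["and"])


def half_adder(a: int, b: int) -> Tuple[int, int]:
    """'Interconnect': route the same two inputs to both logic elements."""
    return sum_lut.evaluate(a, b), carry_lut.evaluate(a, b)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

Loading a different truth table into `sum_lut` (for example `CONFIG["or"]`) changes what the element computes without changing the element itself, which is the sense in which the logic is programmable.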

Compared to CPUs, FPGAs are more parallel and flexible, providing deterministic latency (FPGA vs CPU)

Processors are responsible for processing data input from the outside world. CPUs, GPUs, and FPGAs differ in their processing flow, which makes CPUs specialize in serial computation characterized by complex control, while GPUs and FPGAs are more adept at large-scale parallel computation:

A CPU is a processor based on the Von Neumann architecture and follows the processing flow “Fetch, Decode, Execute, Memory Access, Write Back”. The control unit first fetches an instruction from RAM, then decodes it to determine what operation is to be performed on the data, and sends the data to the ALU for the corresponding processing. The result is saved back to RAM, and then the next instruction is fetched. This process, known as SISD (Single Instruction Single Data), means that the CPU is good at decision making and control but less efficient at multi-data processing tasks. Modern CPUs support both SISD and SIMD processing, but they still cannot match GPUs and FPGAs in the scale of parallelism.
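The serial instruction cycle described above can be sketched as a toy interpreter. This is only an illustration under stated assumptions: the three-instruction set (`LOAD`, `ADD`, `STORE`), the four-register file, and the function name `run` are all hypothetical and do not correspond to any real CPU.

```python
from typing import List, Tuple

# Hypothetical instruction format: (opcode, x, y, z)
Instruction = Tuple[str, int, int, int]


def run(program: List[Instruction], memory: List[int]) -> int:
    """Execute the program one instruction at a time; return the step count."""
    registers = [0, 0, 0, 0]
    pc = 0      # program counter
    steps = 0
    while pc < len(program):
        instruction = program[pc]          # fetch
        opcode, x, y, z = instruction      # decode
        if opcode == "LOAD":               # memory access: reg[x] <- mem[y]
            registers[x] = memory[y]
        elif opcode == "ADD":              # execute in the "ALU": reg[x] <- reg[y] + reg[z]
            registers[x] = registers[y] + registers[z]
        elif opcode == "STORE":            # write back: mem[x] <- reg[y]
            memory[x] = registers[y]
        else:
            raise ValueError(f"unknown opcode: {opcode}")
        pc += 1                            # only now move on to the next instruction
        steps += 1
    return steps


memory = [3, 4, 0]
program = [
    ("LOAD", 0, 0, 0),   # r0 <- mem[0]
    ("LOAD", 1, 1, 0),   # r1 <- mem[1]
    ("ADD", 2, 0, 1),    # r2 <- r0 + r1
    ("STORE", 2, 2, 0),  # mem[2] <- r2
]
steps = run(program, memory)
print(memory, steps)
```

Even a single addition takes four sequential steps here, because every operand and result passes through the fetch and decode loop one instruction at a time.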

GPUs follow SIMD (Single Instruction Multiple Data) processing, in which a single processing routine, the kernel, is run on many threads to process data sent from the CPU in a highly parallel fashion. By removing the branch prediction, out-of-order execution, and memory prefetching modules found in modern CPUs, and by reducing cache space, the simplified cores in a GPU can perform very large-scale parallel operations and save the time that CPUs spend on branch prediction and instruction reordering. The disadvantage is that data must be adapted to the GPU’s processing framework, for example through batch alignment, so maximum real-time performance is still not achieved.

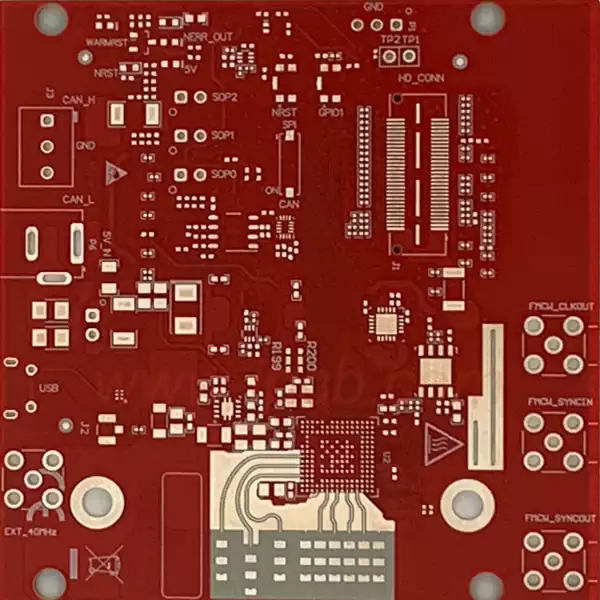

FPGAs, on the other hand, have a user-defined processing flow that directly determines how the on-chip CLBs (configurable logic blocks) are connected. Hundreds of thousands of CLBs can operate independently, so SIMD, MISD (Multiple Instruction Single Data), and MIMD (Multiple Instruction Multiple Data) processing can all be implemented in an FPGA. Because the processing flow has been mapped directly to hardware, no extra time is spent fetching and decoding instructions, and steps such as the out-of-order execution used by CPUs are unnecessary, which gives FPGAs very high real-time performance in data processing.
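As a rough illustration of a processing flow mapped to hardware, the following Python sketch models a clocked pipeline in which every stage works on its own data in the same cycle. It is a software simulation under simplifying assumptions, not FPGA code: the stage functions, the sample values, and the name `simulate_pipeline` are hypothetical, and real designs are written in a hardware description language.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Stage = Callable[[int], int]


def simulate_pipeline(stages: Sequence[Stage],
                      samples: Sequence[int]) -> List[Tuple[int, int]]:
    """Clock a register pipeline; return (cycle, value) for each result."""
    registers: List[Optional[int]] = [None] * len(stages)  # one output register per stage
    results: List[Tuple[int, int]] = []
    # Feed one sample per cycle, then empty cycles to flush the pipeline.
    stream: List[Optional[int]] = list(samples) + [None] * len(stages)
    for cycle, sample in enumerate(stream):
        # Update the last stage first so each stage reads the value its
        # predecessor held at the end of the previous cycle.
        for i in range(len(stages) - 1, -1, -1):
            source = sample if i == 0 else registers[i - 1]
            registers[i] = None if source is None else stages[i](source)
        if registers[-1] is not None:
            results.append((cycle, registers[-1]))
    return results


stages = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
results = simulate_pipeline(stages, [1, 2, 3])
# Sample k enters at cycle k, so its latency is (exit cycle - k).
latencies = [cycle - k for k, (cycle, _) in enumerate(results)]
print(results, latencies)
```

In this model all three stages are busy in the same cycle once the pipeline is full, and every sample takes the same number of cycles from input to output, which is the behaviour the paragraph above attributes to a flow fixed in hardware.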

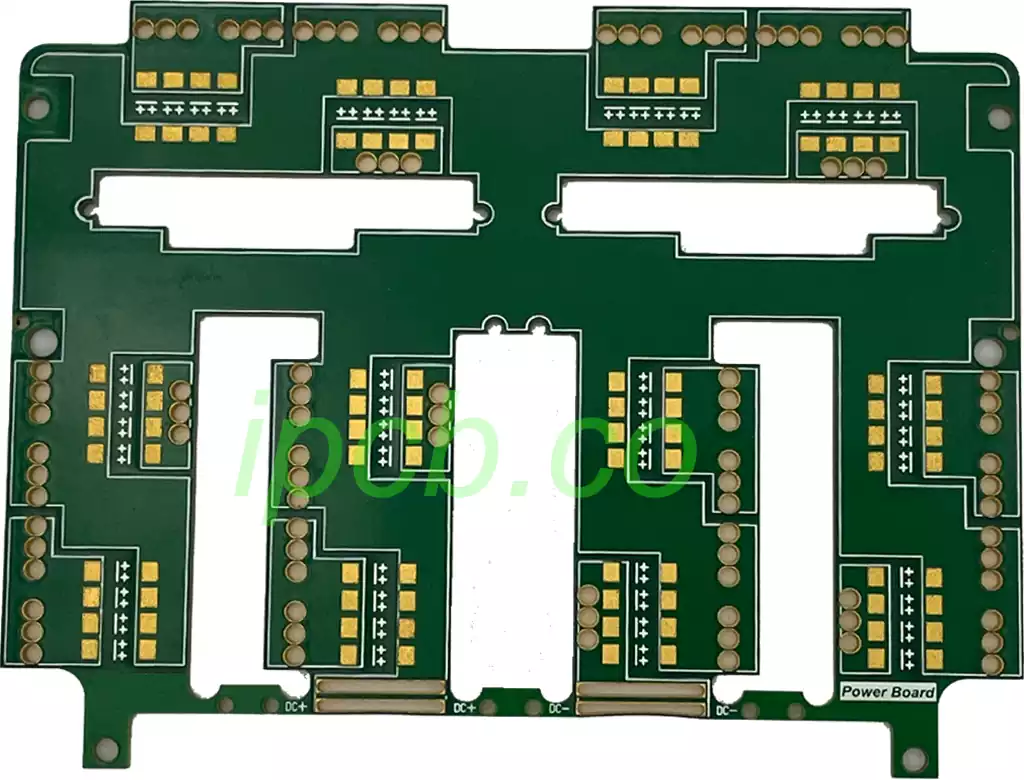

Therefore, both GPUs and FPGAs are used as task-offloading units for CPUs, and their efficiency in parallel computing is higher than that of CPUs. In the high-performance computing scenarios of data centers, GPUs and FPGAs often take the form of discrete accelerator cards: the CPU “offloads” some of its compute-intensive tasks to the GPU or FPGA, and these “devices” are interconnected with the CPU via PCIe to provide highly parallel computing acceleration.

The great advantage of an FPGA over a CPU is deterministic low latency, which results from architectural differences. CPU latency is non-deterministic: as utilization rises, the CPU has more tasks to process and must reschedule them, so processing latency tends to grow uncontrollably; the more tasks there are, the slower the processing becomes. FPGA latency, by contrast, is deterministic because at the place-and-route stage the design tools have already ensured that the worst-case path meets the timing requirements. There is no need to spend time on steps that a general-purpose processor requires, such as fetching and decoding instructions, and the resulting problems of reordering execution and waiting for instructions to be scheduled are avoided as well.

The higher the CPU utilization, the higher the processing latency, whereas an FPGA has a stable processing latency regardless of utilization. FPGAs can provide nanosecond-level processing latency, whereas CPUs are usually in the millisecond range. For example, in an autonomous driving system where camera data is transferred directly to the MIPI interface of the FPGA, the difference between the best-case and worst-case processing latency is only 22 ns, while when the CPU is involved in the data transfer the difference is more than 23 ms, which is equivalent to doubling the CPU’s latency when it is busy. In addition, when utilization rises to 90%, the CPU’s processing time is as long as 46 ms. For a car traveling at 100 km/h, 46 ms means that between the camera seeing an obstacle and the system taking braking action, the car has already traveled about 1.28 m, while with the FPGA it has traveled only about 3 µm, which is effectively an instantaneous response. The 1.28 m saved may reduce the probability of a collision. Therefore, in automotive and industrial scenarios that require deterministic low latency, FPGAs have a great advantage.
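The 1.28 m figure follows from distance = speed × latency. A short Python check using only the numbers quoted above (100 km/h, 46 ms, and the 22 ns spread) is shown below; the function name `distance_travelled_m` is hypothetical.

```python
def distance_travelled_m(speed_kmh: float, latency_s: float) -> float:
    """Distance covered (in metres) while the system is still processing."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # convert km/h to m/s
    return speed_m_per_s * latency_s


# CPU at 90% utilization: 46 ms processing time.
cpu_distance_m = distance_travelled_m(100.0, 46e-3)

# FPGA best-to-worst-case spread: 22 ns. This is the jitter, not the total latency.
fpga_jitter_distance_m = distance_travelled_m(100.0, 22e-9)

print(f"CPU, 46 ms: {cpu_distance_m:.2f} m")
print(f"FPGA jitter, 22 ns: {fpga_jitter_distance_m * 1e6:.2f} micrometres")
```

At 100 km/h the car moves roughly 27.8 m every second, so a millisecond-scale delay costs on the order of a metre, while a nanosecond-scale variation corresponds to less than a micrometre.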

The biggest difference between an FPGA and a CPU lies in the architecture. FPGAs are better suited to streaming processing that requires low latency, while GPUs are better suited to processing large volumes of homogeneous data. FPGAs and CPUs work in tandem, with the FPGA handling localized, repetitive tasks and the CPU handling complex tasks.