AI server PCBs form the physical foundation and neural hub of artificial intelligence servers. They host and interconnect processors, high-bandwidth memory (HBM), high-speed network interfaces, and power supply systems, providing reliable, high-speed, low-latency signal and power pathways for massively parallel computing. In the era of large-scale model training and inference, the complexity, manufacturing difficulty, and cost of a top-tier AI server PCB can be tens of times those of a traditional server motherboard.

Core Functions of AI Server PCBs

- Physical Support and Structural Integrity

Serving as the server’s ‘skeleton’, the AI server PCB must withstand the mechanical load of liquid-cooling pipework and cold plates, the weight of numerous GPU and HBM modules, and deformation under extreme thermal stress. It functions not only as a component mounting platform but also as the fundamental guarantee of the entire system’s mechanical stability.

- High-Speed Signal Transmission Network

Modern AI servers routinely handle data transfer rates measured in terabytes per second, making PCB routing the equivalent of a ‘light-speed expressway’. Good PCB design keeps signal loss, crosstalk, and reflections to a minimum, so that high-speed links such as NVLink, PCIe 6.0, CXL 3.0, and Ultra Ethernet run stably at peak bandwidth and efficiency. This directly determines whether the cluster’s overall computing power can be fully utilised.

- Thermal Management and Power Distribution

The GB300 NVL72 is a rack-scale system rather than a single board, and its power consumption is quoted at around 140 kW per rack, so the boards inside it must cope with extremely high heat flux densities at each chip. Top-tier AI server PCBs commonly employ aggressive thermal management techniques (buried copper coins, copper inlays, direct-contact heat pipes, and even integrated liquid-cooled cold plates on the reverse side) to draw heat away from the chip core via the shortest path. This makes the PCB a critical link in the efficiency of the entire liquid cooling system.

- Scalability and Future Compatibility

Leading AI server PCBs reserve ample PCIe lanes, CXL interfaces, power module positions, and optical module slots. Some even adopt a pluggable ‘motherboard + daughter card’ design. The aim is for a single board design to support current H100/H200/B200/B300/GB200 platforms while leaving an upgrade path to next-generation architectures such as Rubin, which protects customer investment.
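To make the point about reflections concrete, here is a minimal sketch of how an impedance discontinuity (for example at a via or connector) turns into a reflection. It uses the standard transmission-line relation Γ = (Z_load − Z0) / (Z_load + Z0). The 85 Ω nominal differential impedance and the +10% deviation are illustrative assumptions, not figures from any specific board.

```python
import math


def reflection_coefficient(z_load: float, z0: float) -> float:
    """Voltage reflection coefficient at a step from impedance z0 to z_load."""
    return (z_load - z0) / (z_load + z0)


def return_loss_db(gamma: float) -> float:
    """Return loss in dB as a positive number (larger means less reflection)."""
    if gamma == 0:
        return math.inf
    return -20.0 * math.log10(abs(gamma))


# Assumed values for illustration only: an 85-ohm differential pair whose
# impedance rises by 10% at a via or connector transition.
z0 = 85.0
z_discontinuity = z0 * 1.10

gamma = reflection_coefficient(z_discontinuity, z0)
print(f"reflection coefficient: {gamma:.4f}")
print(f"return loss: {return_loss_db(gamma):.1f} dB")
```

Under these assumptions a 10% impedance step reflects a little under 5% of the incident voltage, a return loss of about 26 dB. Tightening the impedance tolerance is what pushes that number up.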

Technical Requirements for AI Server PCBs

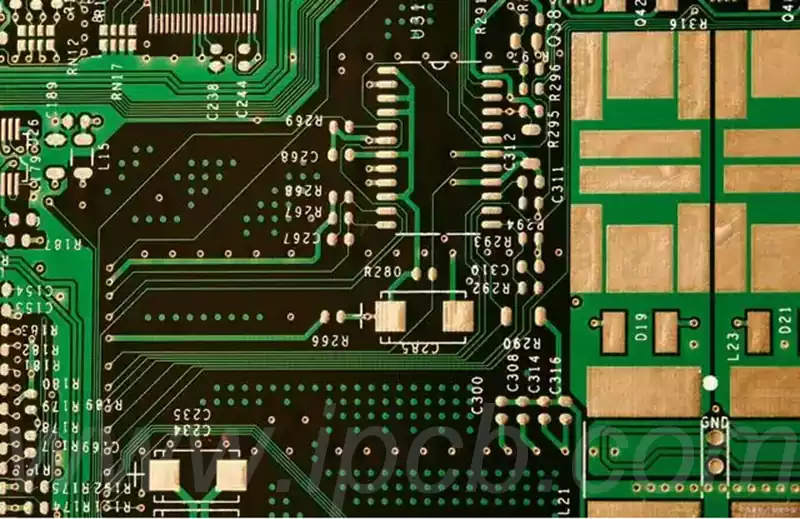

- Ultra-High-Density Integration

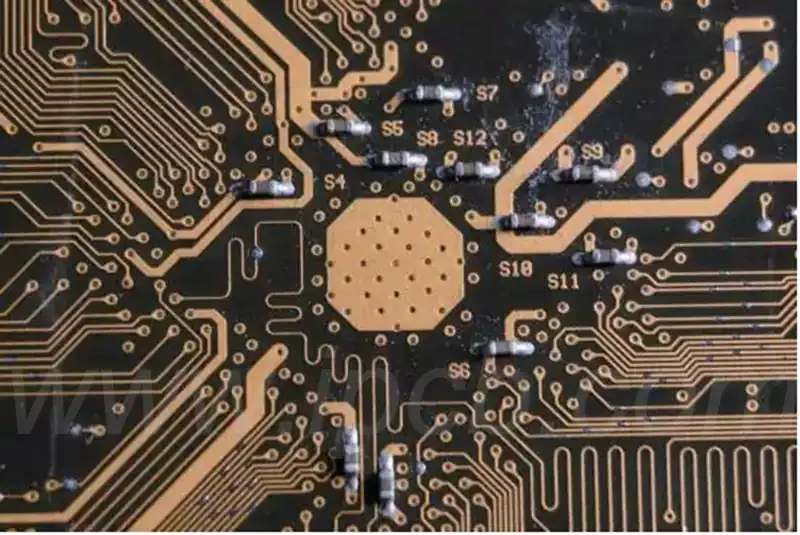

Current flagship products typically combine 28-40 layer PCBs with localised HDI blind/buried vias and 2.5D/3D packaging such as silicon interposers, EMIB, and CoWoS-R. At the interposer and redistribution-layer level, line width/spacing approaches 1-2μm. This is what makes it possible to pack 72 GPUs, 36 CPUs, and tens of terabytes of HBM into a single NVL72-class system.

- Ultimate Signal Integrity

This requires ultra-low-loss laminates such as Megtron 8/10 and Tachyon, coupled with precision impedance control, back-drilling, and blind-via electroplating fill processes. Together these keep the insertion loss of 200+ Gbps signals within roughly 20 dB.

- Exceptional Power Delivery Capability

Boards must support peak currents of 8,000-15,000 A. This is achieved through 10 oz (350μm) thick copper, embedded copper blocks, and multi-layer power plane design, so that the supply voltage stays within ±3% of nominal during GPU transient power surges.

- Reliability and Longevity Assurance

Boards must pass 168-hour high-temperature ageing at 150°C, 1000 cycles of -40°C to 125°C thermal shock, 85°C/85%RH high-humidity testing, and rigorous vibration/drop tests, in order to meet the requirement of more than ten years of continuous 24/7 operation without failure.

- Forward-Looking Scalability Design

The board reserves sufficient NVSwitch slots, optical module positions, and CXL memory expansion slots. It should be compatible with future 800G/1.6T optical interconnects and support flexible cluster configurations ranging from 8 to 144 cards.
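The power delivery figures above (10 oz copper, a ±3% voltage window) can be turned into a rough resistance budget. The sketch below is a back-of-the-envelope estimate, not a design method: it assumes the common rule of thumb that 1 oz copper is about 35μm thick, a copper resistivity of about 1.72e-8 Ω·m near room temperature, and a hypothetical 0.8 V rail carrying 1,000 A. The rail voltage and per-rail current are illustrative assumptions that do not come from the article.

```python
COPPER_RESISTIVITY_OHM_M = 1.72e-8  # approximate value for copper near 20 °C
MICRONS_PER_OZ = 35.0               # rule of thumb: 1 oz/ft² of copper ≈ 35 μm


def copper_thickness_um(weight_oz: float) -> float:
    """Convert copper weight (oz per square foot) to thickness in microns."""
    return weight_oz * MICRONS_PER_OZ


def sheet_resistance_ohm_per_sq(thickness_um: float) -> float:
    """DC sheet resistance of a copper plane, in ohms per square."""
    return COPPER_RESISTIVITY_OHM_M / (thickness_um * 1e-6)


def max_path_resistance_ohm(rail_v: float, tolerance: float, current_a: float) -> float:
    """Largest DC path resistance that keeps the IR drop inside the tolerance."""
    return rail_v * tolerance / current_a


# Hypothetical operating point (assumptions, not data from the article).
rail_v = 0.8        # volts
tolerance = 0.03    # the ±3% window quoted in the text
current_a = 1000.0  # amps on one rail

thickness = copper_thickness_um(10)             # 10 oz copper
r_sheet = sheet_resistance_ohm_per_sq(thickness)
r_budget = max_path_resistance_ohm(rail_v, tolerance, current_a)

print(f"copper thickness: {thickness:.0f} um")
print(f"sheet resistance: {r_sheet * 1e6:.1f} micro-ohm per square")
print(f"resistance budget: {r_budget * 1e6:.1f} micro-ohm")
print(f"squares allowed on one plane: {r_budget / r_sheet:.2f}")
```

With these assumed inputs, a single 10 oz plane can span only about half a square before the whole ±3% budget is spent on DC drop alone. This is why thick copper is combined with embedded copper blocks and several power planes in parallel.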

The True Development Trends of AI Server PCBs in 2025

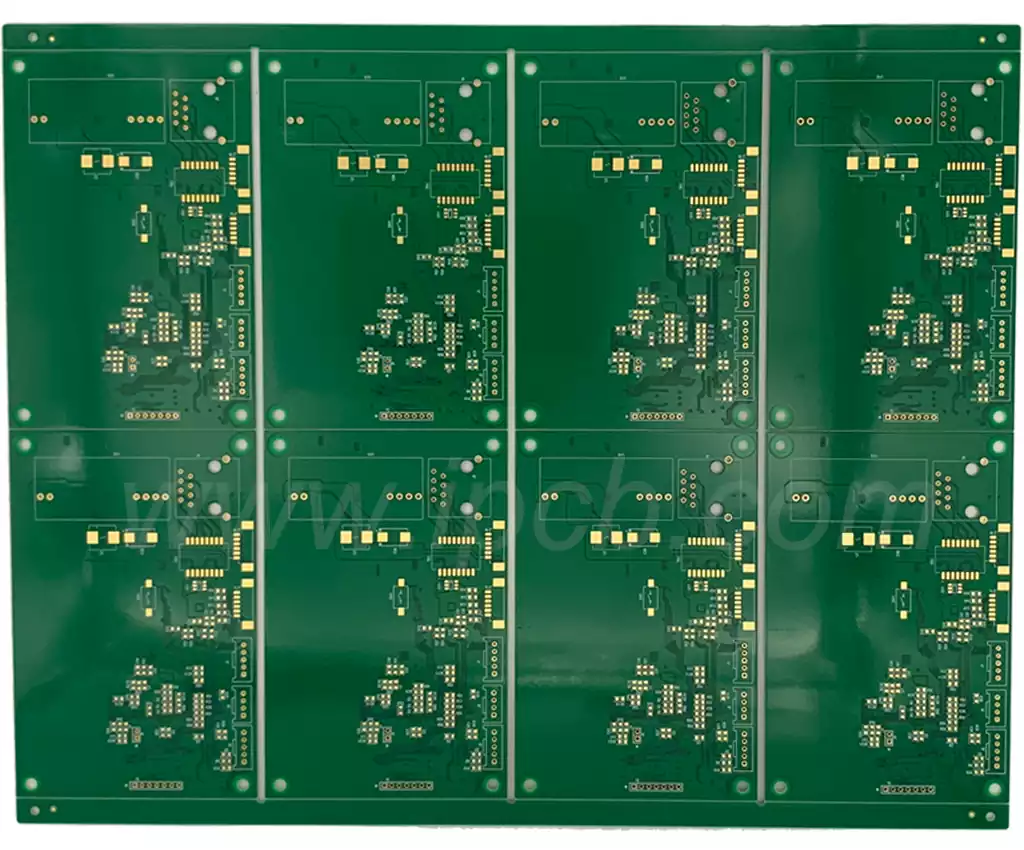

- Gigantism + Ultra-High Layer Counts

Board dimensions have grown from the traditional 800×400 mm to 1200×600 mm or larger, with layer counts typically ranging from 36 to 48 and exceeding 60 layers in localised areas.

- Glass Core Substrates Enter Commercial Deployment

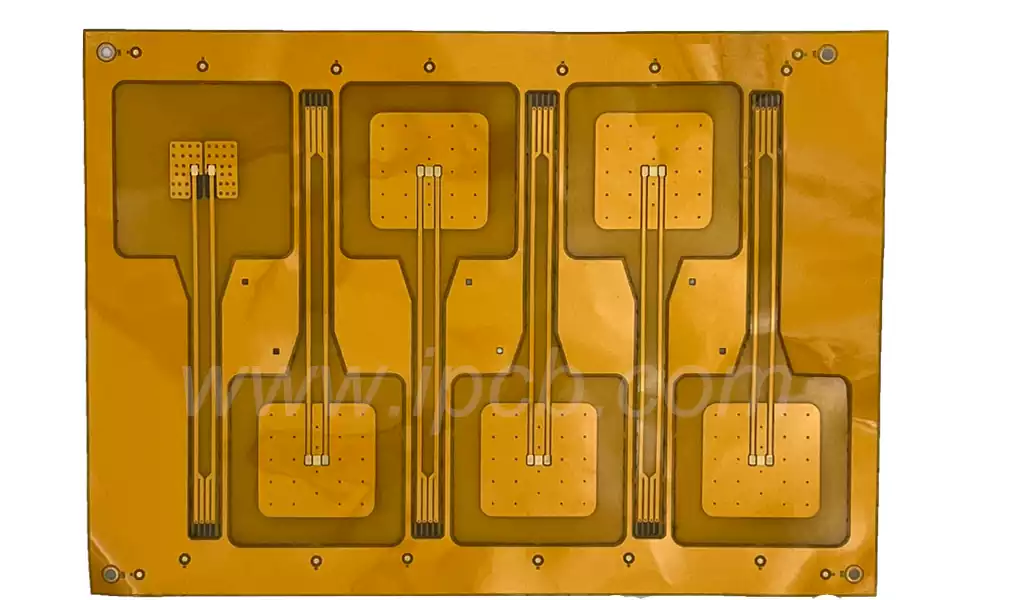

AMD, Intel, and Samsung have validated the mass-production feasibility of glass core substrates for AI server PCBs. The thermal expansion coefficient of glass can be closely matched to that of silicon, which greatly reduces warpage, and glass enables ultra-fine line widths of 0.8μm. These substrates are expected to become mainstream by 2026-2027.

- Integrated Liquid-Cooled Cold Plate ‘Sandwich’ Structure

Huawei Ascend, NVIDIA GB300, and multiple domestic manufacturers have directly bonded or co-fired liquid-cooled cold plates to the PCB’s reverse side. This creates a ‘sandwich’ structure built for cooling efficiency: chips on the front side, an ultra-thick PCB in the middle, and a cold plate on the reverse side.

- Advanced Packaging Deeply Integrated with PCBs

Technologies such as CoWoS-R, EMIB, and silicon interposers are progressively being embedded within the PCB stack-up. PCBs are evolving beyond mere ‘interconnect boards’ to serve as substrates for massive multi-chip modules (MCMs).

- Green Manufacturing and Sustainability

Lead-free, halogen-free, and low-carbon processes have become standard. Some manufacturers report having achieved carbon neutrality in PCB production, using eco-friendly materials such as recycled copper foil and biodegradable solder resist inks.
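The argument for glass core substrates rests on thermal expansion mismatch, which can be estimated as ΔL = |α_substrate − α_silicon| × ΔT × L. The sketch below applies that relation over the -40°C to 125°C range used in the thermal shock test. All material values are rough, assumed figures for illustration: about 2.6 ppm/K for silicon, about 15 ppm/K for a conventional organic laminate, and a hypothetical 3.5 ppm/K for a glass core. The 50 mm span is likewise hypothetical.

```python
def differential_expansion_um(cte_a_ppm: float, cte_b_ppm: float,
                              delta_t_k: float, span_mm: float) -> float:
    """Difference in free thermal expansion between two materials, in microns.

    cte_*_ppm are coefficients of thermal expansion in ppm/K; span_mm is the
    length over which the two materials are joined.
    """
    mismatch_per_k = abs(cte_a_ppm - cte_b_ppm) * 1e-6
    return mismatch_per_k * delta_t_k * span_mm * 1000.0  # mm -> um


# Assumed, approximate material values (not taken from the article).
CTE_SILICON = 2.6    # ppm/K
CTE_ORGANIC = 15.0   # ppm/K, typical in-plane figure for an organic laminate
CTE_GLASS = 3.5      # ppm/K, hypothetical glass core tuned close to silicon

delta_t = 125.0 - (-40.0)  # temperature swing of the thermal shock test, in K
span = 50.0                # hypothetical package span in mm

organic = differential_expansion_um(CTE_ORGANIC, CTE_SILICON, delta_t, span)
glass = differential_expansion_um(CTE_GLASS, CTE_SILICON, delta_t, span)

print(f"organic laminate vs silicon: {organic:.1f} um")
print(f"glass core vs silicon:       {glass:.1f} um")
```

With these assumed inputs the organic case comes out near 100μm of differential expansion and the glass case under 10μm, which is the kind of gap that drives the reduction in warpage.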

In the AI era, chips are the ‘heroes in the spotlight’. Yet it is the AI server PCB, costing hundreds of thousands or even millions per unit and so complex to manufacture that it calls for national strategic support, that serves as the unsung champion propelling computing power to new heights. Expensive, scarce, formidable yet elegant, these boards represent the pinnacle of contemporary industrial civilisation.